ChatGPT and sensitive data: what your company can no longer ignore.

With over 13 million monthly visitors in France, ChatGPT has firmly established itself in everyday use. Used as a virtual assistant, search engine or writing tool, it is now an integral part of the workplace, often without any clear oversight.

But this accessibility comes at a cost: data confidentiality. Behind the smooth interface lies a system based on machine learning, which uses entered queries to refine its responses. In other words: what you input, it records, remembers, and reuses.

A critical question: what does ChatGPT do with your data?

If you share sensitive information in a query (client names, internal procedures, confidential files), you risk seeing that data resurface in other generated responses. Admittedly, OpenAI states that it does not sell this data to third parties and mainly uses it to improve the model’s performance. But the line between “improvement” and “leak” is a thin one.

The text you enter, along with your geolocation, account information, browsing history and cookies, is stored on secure servers… located in the United States. In the age of GDPR and digital sovereignty, this raises clear legal questions for European businesses.

The illusion of confidentiality: when AI talks too much.

In March 2023, a bug in an open-source library used by ChatGPT exposed some users’ conversation titles to other users, along with payment information linked to ChatGPT Plus subscriptions. Although the incident lasted only nine hours, it was enough to expose data from 1.2% of the ChatGPT Plus subscribers active during that window. Full credit card numbers were not revealed, but names, email addresses, payment addresses, card types, expiration dates and the last four digits of card numbers were. That is highly sensitive personal data.

And this isn’t an isolated case. A study published in late 2023 showed that ChatGPT, when prompted with certain trick queries, could regurgitate fragments of its training data. Among the exposed items: names, phone numbers, email addresses and sometimes even the contact details of business executives.

In 2023, Samsung engineers copied and pasted confidential source code into ChatGPT to identify bugs. The code thereby left the company’s control and could have been retained to train future models. The incident caused an internal crisis and led the Korean giant to ban generative AI tools on company devices.

In the same vein, a June 2025 IFOP-Talan survey shows that 68% of employees using ChatGPT at work do not inform their management. This autonomy may seem efficient, until it exposes legal documents, confidential agreements or business data to an AI running on external servers.

Even anonymized, this data can feed into other responses generated by the tool. Imagine: what your team types today could, tomorrow, help a competitor craft their strategy. Your method, your organization, your expansion plans—these are all competitive advantages you might unintentionally give away.

An analysis of a 100,000-user sample revealed over 400 sensitive data leaks in just one week, including legal documents, internal memos and source code. Once submitted, this content cannot be recalled or erased. The consequences go far beyond embarrassment: GDPR fines, potential lawsuits, loss of trust and reputational damage.

How to protect your data without giving up AI?

At ATI4, we made a clear choice: to strictly regulate the use of generative AI internally, to protect our data and that of our clients.

This involves training and security charters, but also technical restrictions: permitted data types, access control and isolated environments. We have chosen never to send sensitive data to a non-sovereign model.
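
A restriction on which data types may leave the company can be enforced before a prompt ever reaches an external model. The Python snippet below is a minimal sketch under stated assumptions, not ATI4’s actual tooling: the function `screen_prompt`, the classification markers and the regular expressions are all hypothetical. It rejects any prompt carrying a classification marker and redacts email addresses and French phone numbers from the rest. Real PII detection needs far broader coverage than two patterns.

```python
import re
from dataclasses import dataclass, field

# Hypothetical policy: illustrative patterns only, not exhaustive PII detection.
SENSITIVE_PATTERNS = {
    # Email address; the domain part cannot swallow a trailing sentence period.
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    # French phone number such as "06 12 34 56 78" or "+33 6 12 34 56 78".
    "phone": re.compile(r"(?<!\d)(?:\+33\s?|0)[1-9](?:[\s.-]?\d{2}){4}(?!\d)"),
}

# Hypothetical classification markers: prompts containing them are never sent.
BLOCKING_MARKERS = ("CONFIDENTIAL", "INTERNAL ONLY")


@dataclass
class FilterResult:
    allowed: bool  # may the prompt be forwarded to the external model?
    text: str  # redacted prompt (empty when blocked)
    findings: list[str] = field(default_factory=list)  # what was detected


def screen_prompt(prompt: str) -> FilterResult:
    """Block prompts with classification markers; redact other sensitive data."""
    upper = prompt.upper()
    for marker in BLOCKING_MARKERS:
        if marker in upper:
            # Classified content: refuse outright rather than try to redact it.
            return FilterResult(allowed=False, text="", findings=[f"marker:{marker}"])

    findings: list[str] = []
    redacted = prompt
    for label, pattern in SENSITIVE_PATTERNS.items():
        if pattern.search(redacted):
            findings.append(label)
            # Replace every match with a placeholder such as "[EMAIL]".
            redacted = pattern.sub(f"[{label.upper()}]", redacted)
    return FilterResult(allowed=True, text=redacted, findings=findings)
```

For example, `screen_prompt("Contact jane.doe@client.com or 06 12 34 56 78.")` returns an allowed result whose text reads `Contact [EMAIL] or [PHONE].`, while any prompt containing the word “Confidential” is rejected before it leaves the network. Blocking rather than redacting classified content is a deliberate choice here: a marker signals that the whole document is sensitive, not just a few identifiable fields.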

We are also exploring alternatives that better respect European frameworks. In 2024, Mistral AI, a French startup backed by €400 million in funding, launched an open-source model that companies can host on their own private infrastructure: a generative AI designed to comply with GDPR requirements.

Don’t fall into the trap of thinking a tool is “without consequences.” Every message you write is data transmitted, stored and analyzed. In sensitive sectors such as finance, energy, defense and healthcare, even a carelessly worded query can become a cybersecurity vulnerability. Don’t leave your strategy in the hands of an opaque algorithm. Confidentiality is not optional; it’s a responsibility.

Find out what’s new at the company.

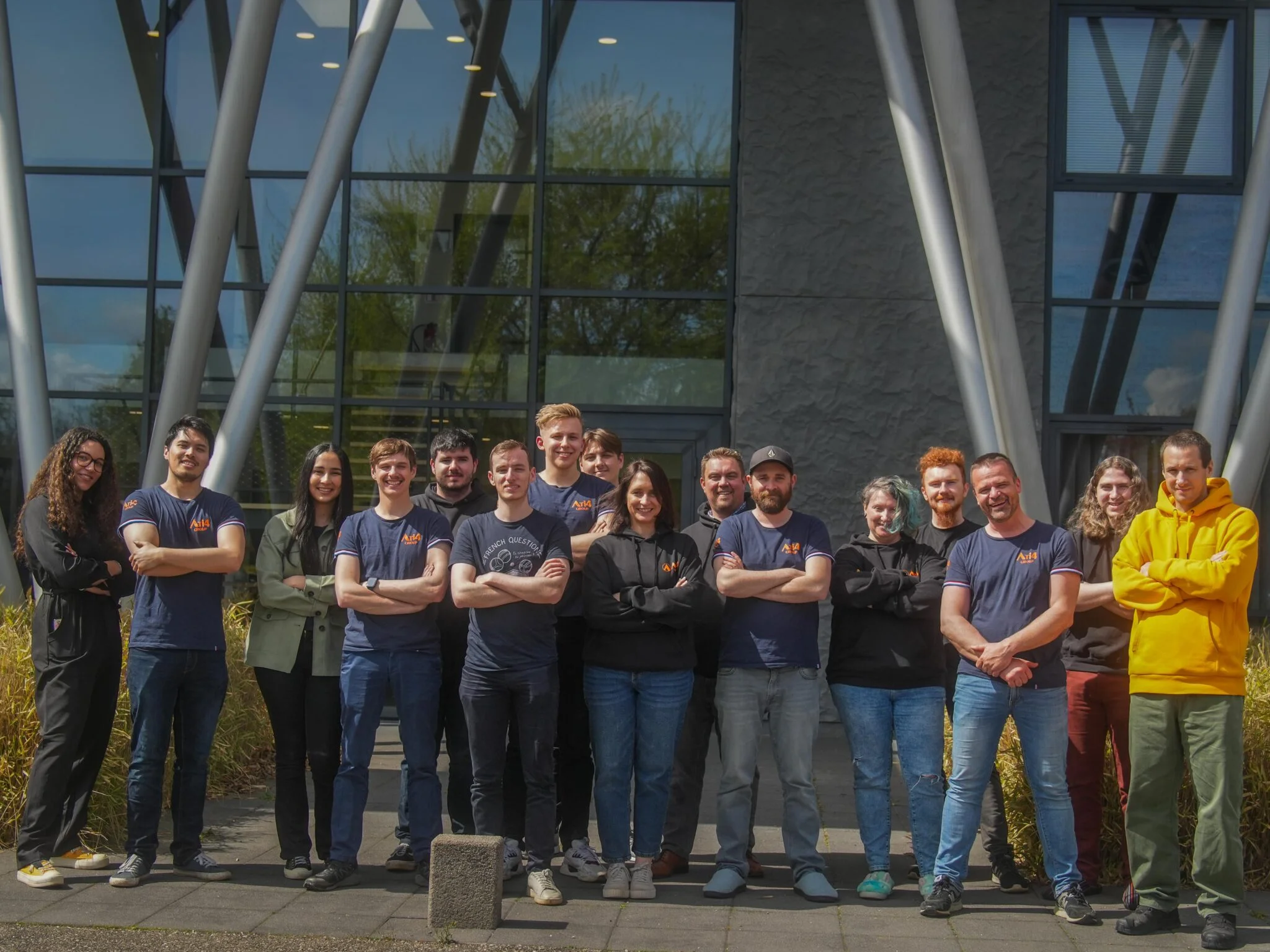

Because mixing fun and work is at the heart of our philosophy, we always try to make a special place for it in our business life.

Discover why the data-strategy-AI triangle is now the engine of B2B e-commerce performance, and how to apply it concretely to build sustainable competitive advantage.

At the end of January 2025, we officially earned our Gold badge as an Upsun partner! A great recognition of our technical expertise and our commitment to supporting you in transforming your hosting infrastructures. Let us explain what this means concretely for your projects.

Discover how a PIM (Product Information Management) can boost your SEO by centralizing product information, automating multi-channel distribution, and enhancing organic visibility.

Why a high-performance CMP is a strategic investment for Magento B2B e-commerce, ensuring GDPR compliance, data quality, and marketing performance.

Learn how the CURL framework strengthens the credibility, utility, reputation, and loyalty of your B2B brand in the age of artificial intelligence. A strategic roadmap to becoming the trusted reference AI systems naturally recommend.

Discover how artificial intelligence is revolutionizing e-commerce, from hyper-personalized shopping experiences and 24/7 virtual assistants to predictive inventory management and fraud detection. Learn how AI reshapes customer interactions and optimizes operations for a tailored, efficient, and engaging shopping journey.

Optimize your checkout funnel with better delivery and payment experiences. Learn how to reduce cart abandonment, increase conversions, and create a seamless and efficient customer journey.

E-commerce accessibility isn’t optional—it’s the law. Avoid €20K fines and tap into a $13T market by fixing these 5 critical issues (hint: your carrousel is likely one).

In 2026, e-commerce is entering a phase of profound transformation. After two decades of almost uninterrupted growth, the European market—especially in France—has reached a stage of maturity where performance is no longer driven solely by traffic acquisition or conversion rate optimization.

Learn how to combine growth hacking and SEO to boost visibility, increase qualified traffic, and turn your website into a true growth engine.

Websites have a limited lifespan (often 3 to 5 years) before they require a refresh. Technology evolves, user behavior changes, and expectations regarding design and digital experience continue to grow. However, “redesign” does not always mean the same thing.

During Meet Magento, held in the Netherlands in early November 2025, Willem Wigman, the founder of Hyvä, made official a decision that is shaking up the Magento ecosystem: the transition of the theme to an open source license. This announcement marks a decisive turning point in the company’s strategy and redefines the platform’s accessibility for the entire e-commerce community.

In today’s context, every millisecond gained on your site matters. Optimizing your web performance is a real advantage for customer experience.

Matomo 5.5.0 marks a turning point for web analytics. This version introduces features that allow you to better understand traffic from emerging digital sources, particularly AI assistants such as ChatGPT, Copilot, Gemini, or Claude.

Web writing increasingly borrows techniques from journalism, and one of the most effective for capturing attention is the inverted pyramid.

How to Celebrate Our 5th Anniversary? Here’s a recap of our trip to Spain, in Palma de Mallorca.

Personal branding has become a key lever in building your marketing strategy. Indeed, it’s no longer just about selling your product or service — it’s about selling a story, a vision, and a personality around your brand.

In B2B and B2C e-commerce, the effectiveness of product pages relies on the notion of copywriting, which is the art of writing texts that inform, engage, and drive action.

For four years, the Shopper Trends barometer has reflected the evolution of purchasing behaviors and consumer expectations. And in 2025, the French are reinventing their shopping journeys.

In the B2B context, new skills are essential. And at the heart of this strategy, one element plays a key role: the product sheet.

In the current economic context, it’s difficult to convince that brand awareness deserves its place among marketing priorities. And yet, SEO remains a major lever for B2B companies.

We operate in a fragmented ecosystem, where consumers can juggle an infinite number of information sources, different platforms, and touchpoints before making a decision. It is a disordered, unpredictable cycle, yet one that is decisive in the final purchase decision: the Messy Middle.

For decades, the famous conversion funnel was enough to understand and interpret consumer behavior. But in 2025, consumer habits have radically changed, in line with new consumption trends.

Today, it’s impossible to ignore the power of video content in a marketing strategy. We break it all down in our article.

With new buyer expectations and the transformation of usage patterns, B2B e-commerce has become a growth driver.

The B2B journey is more thoughtful, longer, often interrupted, but rarely insignificant. When a user adds a product to their cart, they demonstrate genuine interest in the brand and/or its product. An abandoned cart is therefore not necessarily an end in itself, but can be the beginning of a purchase intention.

Product information is vast, so how to manage, structure, and distribute it without friction ?

When we think of e-commerce, we often picture a consumer comfortably seated on their couch, placing an order from a computer or smartphone. Yet, another reality of e-commerce deserves our full attention: B2B.

Today’s professional buyers, well-informed and accustomed to seamless B2C interfaces, now expect customized purchasing journeys that are relevant, efficient, and tailored to their business needs.

The Google Core Update of June 2025, officially launched on June 30, marks a new milestone in the evolution of the search engine and organic search.

If aiming for the moon seems unrealistic, there is a strategic, more precise, and above all, much more profitable long-term approach: that of long-tail keywords.

According to the latest industry data, more than 66% of businesses say they plan to expand internationally in the coming years.

Far from being just communication showcases, these social platforms are now real sales channels.

Starting June 28, 2025, new digital accessibility standards will come into effect, directly impacting e-commerce websites in France. This legal obligation no longer applies only to public platforms—private companies are now included.

In France, the RGAA consists of 106 compliance criteria grouped into 13 fundamental themes that guide the audit of your platform.

Over the past several months, Hyvä has been revealing a series of technical and strategic innovations that are set to transform the entire Adobe Commerce ecosystem.

TikTok, originally known for short, entertaining videos, has recently launched TikTok Shop, a feature that could very well redefine the rules of the e-commerce game.

Agencies, software publishers, digital experts and all players in the digital space—you’ve likely heard the news. Starting June 28, 2025, all e-commerce services will be required to comply with new digital accessibility regulations.

The Speculation Rules API allows browsers to pre-render pages that a user is likely to visit, providing a smoother and faster browsing experience.

Through the AI Tour of France, organized by Medef and Numeum, French companies shared their experiences with AI and the concrete benefits they have gained, as part of the “AI Action Summit.”

In a world where e-commerce has become a cornerstone of the global economy, B2B and B2C sales models represent two essential facets of the system.

The PIM, or Product Information Management solution, is a tool that collects your product data and integrates it directly into the most suitable format for your team, all within a single digital platform.

Design thinking is a methodology that emerged in the 1970s and gained momentum in the 1980s with applications across various sectors, including e-commerce.

The Gen-Z consists of individuals born between 1997 and 2012 and now represents a significant sector of the digital market.

Millions of internet users conduct searches every day via various search engines, whether to find answers to their questions, a restaurant, a specific item, or a schedule.

The Gorilla Club, part of ASPTT Strasbourg, is a sports section dedicated to promoting and teaching American football in Alsace.

These marketing techniques encourage buyers to complete their carts with complementary products to their initial items. Although often confused, these two sales techniques have distinct objectives and can be very effective when implemented correctly.

C2C sales, or “customer to customer,” represent a major evolution in the e-commerce landscape. In this model, individuals are the main players, using specialized sites or applications to sell and buy goods or services among themselves.

Sales funnels, known as “sales funnels” in English, refer to a technique aimed at maximizing conversion rates to sell more effectively online.

In today’s digital landscape, customer reviews—whether positive or negative—inform consumers about the quality of the products or services they are considering purchasing. Positive reviews can significantly boost sales by influencing the purchasing decisions of other consumers.

The new generation spends a lot of time on social media, which has led to the emergence of new professions, notably that of influencer or content creator.

Cross-border e-commerce has transformed the global commercial landscape. This ability to sell anywhere and anytime has significantly boosted companies’ revenues.

Nowadays, social media play a prominent role in our lives. Some use them to share their daily lives, others to spread messages, and some simply to stay in touch with their loved ones or to stay informed about the news.

ChatGPT, this new artificial intelligence tool, allows for the rapid creation of content. Launched by OpenAI in 2022, its goal is to “help by answering your questions and providing information.” Impressive, right?

Soft skills play an equally important role in the success and sustainability of your digital platform. They can transform the way you interact with your customers, your team, and your market.

Over the years, the focus on user experience (UX) and user interface (UI) has been constant, but today, we are increasingly moving towards another equally crucial concept: customer experience (CX).

Virtual Reality (VR) is a rapidly growing technology that is disrupting many sectors, including e-commerce.

Product listings are the cornerstone of the commercial strategy. They play a crucial role in converting visitors into customers as they provide detailed information about the products offered by a company. What are the key points to watch out for and the best practices to optimize them and maximize sales?

The functionalities, costs, and levels of complexity can vary from one CMS to another.

When it comes to optimizing your website’s performance, paying attention to images is a crucial yet often overlooked step.

The dynamics of e-commerce are evolving rapidly, with the primary goal of businesses being to provide an optimal customer experience to maximize conversion rates and, most importantly, sales. For the past two years, a new solution has been making waves: it’s the Hyvä solution and its Hyvä Reset theme.

In the dynamic universe of online marketing and sales, the conversion rate emerges as a key indicator, reflecting the performance and success of a strategy.

In the world of marketing, efficiency reigns supreme.

The e-commerce industry continues to grow at a rapid pace, driven by technological innovation, changes in consumer behavior, and evolving market expectations.

In a constantly evolving digital landscape, it is crucial to ensure that your website is performing well, secure, and meeting the needs of your users.

The realm of web marketing plays a central role, where the scope of digital strategy may vary, but merely existing on the web is equivalent to an active approach to online promotion.

In today’s digital era, Web Marketing has become an indispensable element for businesses aiming to expand their online product and service offerings.

In the current context of e-commerce and marketing communication, the engagement rate has become a crucial indicator for measuring user interaction and involvement with content or a brand.

The sales, that long-awaited event for consumers in search of good deals and discounts. But this event is also a major date in the calendars of e-commerce websites.

Branding and e-commerce have been continuously evolving, particularly since the expansion of the internet.

In a digital world where competition is fierce, the technical optimization of a website is a crucial element to ensure a smooth user experience, enhance performance, and strengthen its search engine ranking.

Online commerce is in constant evolution, and the security of your website, whether you are a small business or a major brand, must be a top priority. E-commerce websites handle vast amounts of data to personalize their product and service offerings, tailoring them to the needs of their target audiences.

We celebrated our third birthday last June 1st…. Yep, so soon! 🥳

With Magento 2, enhancements and new features have been introduced, thus providing businesses with optimized site management.

We recently conducted an internal study to assess our ATI4 employer brand and highlight areas for improvement.

A year ago, we hired 4 developers to join our Magento Academy. We aimed to train them to use this new technology.

Last November, ATI4 joined forces with Numéric Emploi Grand-Est to host its first Apéro Dev event.